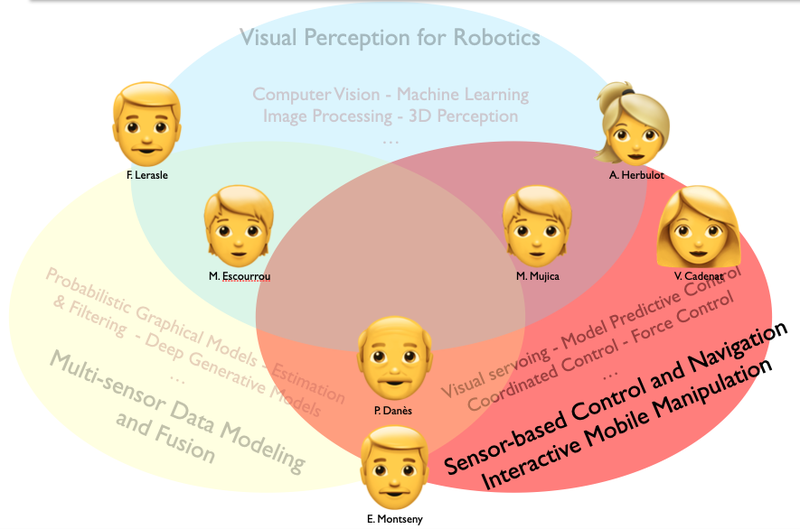

Sensor-based Navigation and Control -- Interactive Mobile Manipulation

Manipulation, mobile manipulation and navigation with exteroceptive sensor feedback

Considering a (manipulator, mobile, or mobile manipulator) robot equipped with (2D or 3D) visual, (laser, LiDAR, or RADAR) proximetric, and/or effort sensors, the objective is to perform positioning, navigation, or manipulation tasks based on exteroceptive sensory feedback. A “(multi)sensor-based” approach allows these tasks to be executed robustly despite multiple constraints or artifacts: absence of metric localization; variability of the environment; presence of dynamic obstacles; physical interaction with humans; etc. The underlying techniques include visual or multi-sensor servoing, predictive control, coordinated control, effort control, etc.
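As a generic illustration of visual servoing, the Python/NumPy sketch below implements the textbook image-based visual servoing (IBVS) law for point features: the interaction matrices of the points are stacked into a matrix `L`, and the camera twist is computed as `v = -gain * pinv(L) @ (s - s_star)`. This is not the team's controller; the function names, the gain value, and the assumption that the depth `Z` of each point is known are choices made for this sketch only.

```python
import numpy as np


def point_interaction_matrix(x: float, y: float, Z: float) -> np.ndarray:
    """2x6 interaction matrix of a normalized image point (x, y) at depth Z.

    It maps the camera twist (vx, vy, vz, wx, wy, wz), expressed in the
    camera frame, to the velocity of the point in the image.
    """
    return np.array([
        [-1.0 / Z, 0.0, x / Z, x * y, -(1.0 + x * x), y],
        [0.0, -1.0 / Z, y / Z, 1.0 + y * y, -x * y, -x],
    ])


def ibvs_velocity(s: np.ndarray, s_star: np.ndarray, depths: np.ndarray,
                  gain: float = 0.5) -> np.ndarray:
    """Classical IBVS law v = -gain * pinv(L) @ (s - s_star).

    s, s_star: (N, 2) current and desired normalized point coordinates.
    depths:    (N,) depth of each point (assumed known in this sketch).
    Returns the (6,) camera twist to apply.
    """
    L = np.vstack([point_interaction_matrix(x, y, Z)
                   for (x, y), Z in zip(s, depths)])
    error = (s - s_star).reshape(-1)
    return -gain * np.linalg.pinv(L) @ error


if __name__ == "__main__":
    # Four points forming a square; the desired square is slightly smaller,
    # which mainly calls for a backward motion along the optical axis.
    s = np.array([[0.1, 0.1], [-0.1, 0.1], [-0.1, -0.1], [0.1, -0.1]])
    print(ibvs_velocity(s, 0.8 * s, depths=np.ones(4)))
```

The pseudo-inverse makes the law usable with more features than degrees of freedom; handling occlusions, constraints, or several cameras, as in the projects below, requires richer schemes than this one.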

Monitoring of the environment of heavy vehicles

This project lies at the confluence of the team's Visual Perception and Control activities. The aim is to secure interactions between human operators and heavy mobile manipulators, which are a source of accidents. It involves predicting potential collisions by combining sensor data fusion (fish-eye cameras and on-board radars) with a characterization of the machines' volume (analysis and prediction of their workspace). Sensor failures induced by harsh environmental conditions (dust, mud, weather) and the sporadic nature of the data (occlusions, etc.) are major issues. The functions will be implemented on the first French high-performance on-board computer, developed by ACTIA.

France 2030 BPI PROTECH Project. Collaboration with the companies ACTIA, AgreenCulture... Contacts: Frédéric Lerasle and Martín Mujica.

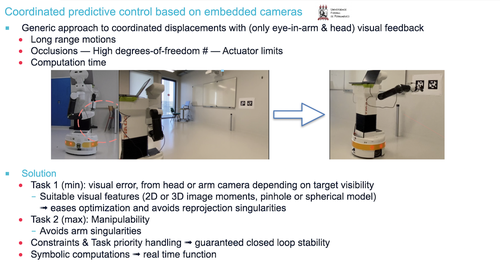

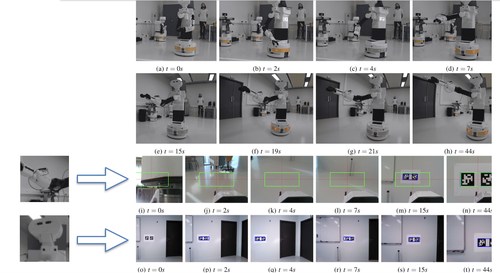

Coordinated control of a mobile manipulator robot by visual predictive control

The objective is to enable a mobile manipulator robot to perform coordinated base and arm motions using visual feedback from the images delivered by a hand-eye camera and a camera mounted on the head. The challenges are: exploiting redundancy; handling occlusions and actuator saturations; and designing an algorithm that can run in real time. The solution combines two tasks whose priorities are adjusted online: a visual error, to be minimized, defined in one camera or the other from suitable visual cues; and a manipulability criterion, to be maximized. The resulting hybrid task is optimized within the real-time constraints, and closed-loop stability is theoretically guaranteed while the constraints are satisfied.
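One common choice of manipulability criterion is Yoshikawa's measure `w = sqrt(det(J @ J.T))`, which vanishes at singular configurations. The Python/NumPy sketch below computes it for a hypothetical planar two-link arm and combines it with a visual error in a simple weighted cost. The arm, its link lengths, and the fixed `weight` standing in for the online prioritization of the two tasks are assumptions for illustration; the actual predictive controller, which optimizes over a horizon under saturation and visibility constraints, is not reproduced here.

```python
import numpy as np


def planar_2link_jacobian(q: np.ndarray, l1: float = 0.5,
                          l2: float = 0.4) -> np.ndarray:
    """Position Jacobian of a hypothetical planar 2-link arm (angles q)."""
    q1, q2 = q
    s1, c1 = np.sin(q1), np.cos(q1)
    s12, c12 = np.sin(q1 + q2), np.cos(q1 + q2)
    return np.array([
        [-l1 * s1 - l2 * s12, -l2 * s12],
        [l1 * c1 + l2 * c12, l2 * c12],
    ])


def manipulability(J: np.ndarray) -> float:
    """Yoshikawa's measure sqrt(det(J J^T)) for J with rows <= columns.

    Computed as the product of the singular values of J, which is
    numerically safer than taking the square root of a determinant.
    """
    return float(np.prod(np.linalg.svd(J, compute_uv=False)))


def hybrid_cost(visual_error: np.ndarray, J: np.ndarray,
                weight: float) -> float:
    """Illustrative cost: minimize ||e||^2 while maximizing manipulability.

    The fixed weight is an assumption of this sketch, not the published
    online prioritization scheme.
    """
    return float(visual_error @ visual_error) - weight * manipulability(J)


if __name__ == "__main__":
    for q2 in (0.0, np.pi / 4, np.pi / 2):
        J = planar_2link_jacobian(np.array([0.3, q2]))
        print(f"q2={q2:.2f}  w={manipulability(J):.3f}")
```

For this arm, `w = l1 * l2 * |sin(q2)|`: it is zero with the arm stretched out and maximal with the elbow at a right angle, which is the kind of configuration a manipulability-maximizing task pushes towards.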

Collaboration with the Federal University of Pernambuco, Brazil. Doctoral thesis of Hugo Bildstein (Funded by Toulouse III Paul Sabatier University via the EDSYS Doctoral School). Contact: Viviane Cadenat.

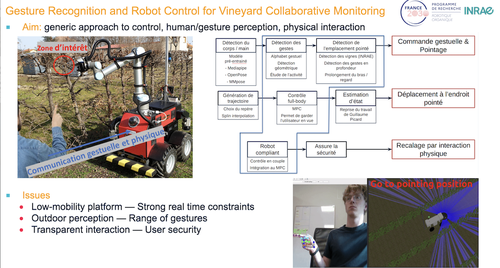

Control of a mobile manipulator robot in visual and physical interaction with a human operator

The application framework is collaborative vineyard monitoring. The goal is to define a generic approach covering: the definition of gesture-based instructions for a mobile manipulator (visual analysis of the human operator; gesture detection and recognition; characterization of a pointing gesture); the coordinated motion of the mobile manipulator towards the pointed location while maintaining visual contact with the user; and the safe recalibration of the robot through physical interaction with the human. The low mobility of the base, the severe time constraints, and the outdoor perceptual conditions make the problem difficult.

PI2 Action (Interactive Mobile Manipulation) of the O2R PEPR. Doctoral thesis of Rémi Porée (Funded by O2R. Co-supervised with INRAE Clermont-Ferrand). Contact: Martín Mujica.

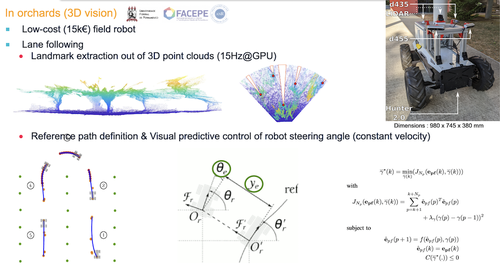

Sensor-based navigation in the absence of (absolute or relative) metric localization

The navigation of a mobile robot in a vineyard or an orchard is considered when only prior topological information about the environment is available: rows, alleys, headland areas. Intra-row navigation exploits visual primitives extracted from 2D or 3D images (neural techniques, point cloud analysis, etc.) in control algorithms that handle occlusions and unforeseen dynamic obstacles. The inter-row U-turn is performed by executing spiral trajectories defined from laser primitives. The resulting all-season, all-weather solution enabled error-free navigation along an 800 m path in an orchard.

Collaboration with Naio, then ANR/FACEPE ARPON Project with Federal University of Pernambuco, Brazil. Doctoral thesis of Antoine Villemazet (Funded by ARPON). Warm thanks to the Cité des Sciences Vertes, Auzeville (31) and to the CEFEL, Montauban (82). Contact: Viviane Cadenat.

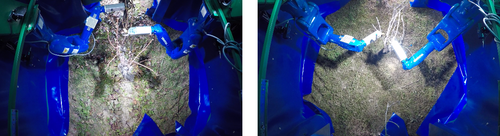

Robotics for Vine Pruning

The R2T2 (“Robot de Taille de Vigne”, i.e., Vine Pruning Robot) project aimed to design and develop, up to the pre-industrial stage, a robotic block. Once installed on a harvesting-machine-type carrier, this block would control it so as to autonomously prune vines trained in Guyot or Cordon de Royat. RAP's involvement in R2T2 concerned the following points: contribution to mechatronic integration (accessibility and optimal positioning of the manipulator arms, coll. FLDI company); motion planning for the manipulator arms, free of collisions and self-collisions and fully exploiting their dynamics; integration of all the libraries developed by the partners into a dependable real-time software architecture (including communication with the carrier, coll. CNHi company); and a supervisor in charge of the concurrent execution and synchronization of the different functions (nominal case and degraded situations).

R2T2 was jointly funded by the FUI and the Occitanie Region (2018-2021+). Besides LAAS-CNRS, it involved the Vinovalie Cooperative (leader), the French Institute of Vine and Wine (IFV), ICAM Toulouse, the SMEs ORME and FLDI, as well as CNHi. Warm thanks to the Agri Sud-Ouest Innovation Pole for its support, as well as to the GEPETTO and RIS robotics teams, whose technologies, embodied in open-source software suites, were also integrated into the project. Contact: Patrick Danès.

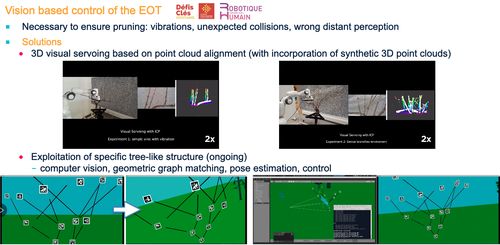

Following R2T2, the vision-based control of the cutting organ of a vine pruning robot has been studied along two lines of research: the combination of ICP (iterative closest point) point cloud alignment techniques with 3D servoing (incorporating setpoints synthesized by simulation); and the exploitation of structures specific to tree plants such as vines.
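For readers unfamiliar with ICP, the following Python/NumPy sketch shows a minimal point-to-point variant: at each iteration, every source point is paired with its nearest target point, and the rigid transform that best aligns those pairs is obtained in closed form by SVD (Kabsch method). It is a generic textbook sketch under simplifying assumptions (brute-force nearest neighbours, no outlier rejection, no coupling with the 3D servoing loop), not the algorithm developed in the thesis; the function names are illustrative.

```python
import numpy as np


def best_rigid_transform(src: np.ndarray, dst: np.ndarray):
    """Least-squares R, t such that R @ src[i] + t ~ dst[i] (Kabsch/SVD)."""
    mu_s, mu_d = src.mean(axis=0), dst.mean(axis=0)
    H = (src - mu_s).T @ (dst - mu_d)          # 3x3 cross-covariance
    U, _, Vt = np.linalg.svd(H)
    d = np.sign(np.linalg.det(Vt.T @ U.T))     # avoid returning a reflection
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = mu_d - R @ mu_s
    return R, t


def icp(src: np.ndarray, dst: np.ndarray, max_iter: int = 50,
        tol: float = 1e-10):
    """Point-to-point ICP aligning src (N, 3) onto dst (M, 3).

    Returns the cumulative rotation R and translation t mapping src onto dst.
    Nearest neighbours are found by brute force (fine for small clouds).
    """
    R_total, t_total = np.eye(3), np.zeros(3)
    current = src.copy()
    prev_err = np.inf
    for _ in range(max_iter):
        # Pair each current point with its closest target point.
        d2 = ((current[:, None, :] - dst[None, :, :]) ** 2).sum(axis=-1)
        matched = dst[d2.argmin(axis=1)]
        R, t = best_rigid_transform(current, matched)
        current = current @ R.T + t
        # Compose the increment with the transform accumulated so far.
        R_total, t_total = R @ R_total, R @ t_total + t
        err = np.linalg.norm(current - matched, axis=1).mean()
        if abs(prev_err - err) < tol:
            break
        prev_err = err
    return R_total, t_total
```

Like any local method, this ICP only converges to the right pose from a reasonably close initial guess, which is one reason to couple it with a servoing loop that keeps the sensor near its setpoint.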

Doctoral thesis of Fadi Gebrayel (Jointly funded by the Human-Centered Robotics Key Challenge (Occitanie Region, via Toulouse University) and LAAS-CNRS/RAP). Contacts: Martín Mujica, Patrick Danès.

A Concert Japan project is under evaluation, involving French, German, and Japanese partners.