Note: this site is still under construction. In particular:

- The point clouds produced by a high-end stereovision algorithm are not yet available for all stereo pairs

- There is a known issue, about to be solved, with the calibration of the stereo cameras front_cam and haz_cam

- Some information on how the datasets were collected, on calibration, etc., is yet to be provided.

The main pieces of information still missing from the site are tagged "TODO".

Overview

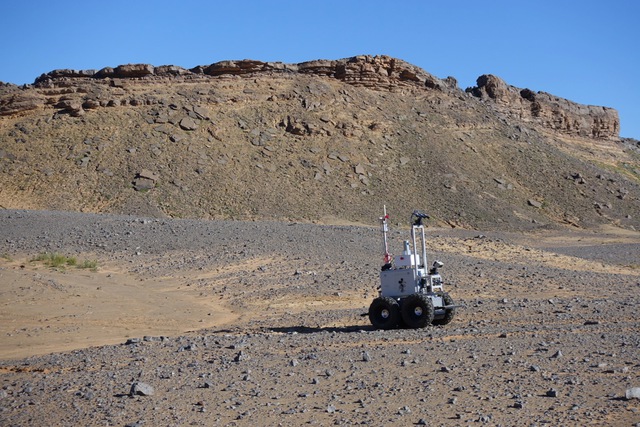

Yet another robotics dataset! This site gathers a collection of data acquired by the two LAAS mobile robots Mana and Minnie on three different planetary analogue sites in the Tafilalet region of Morocco, in Nov/Dec 2018.

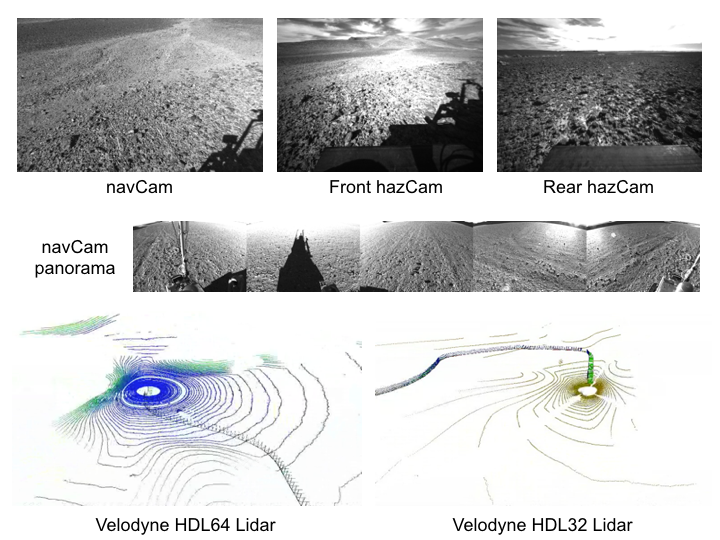

Mana is equipped with a panoramic Velodyne HDL64 Lidar. Minnie has a panoramic Velodyne HDL32 Lidar and three stereoscopic benches: one mounted on an orientable turret on top of a mast, aka "navCam", and two fixed wide field of view benches looking forward and backward, aka "hazCams". Both robots are endowed with wheel odometry, a high-end fibre optic gyro (FoG), a low-cost IMU, and a centimetre-accuracy RTK GPS that provides pose ground truth. All the data are precisely synchronized (details on the robot hardware are provided here).

On each site, a series of trajectories has been recorded, ranging from a few hundred meters to over one km long, along which data have been gathered at speeds from 0.2 to 0.5 m/s and at different times of the day. Some trajectories have been executed both forward and backward, and some involve both robots simultaneously following the same route. All in all, the dataset comprises about 110,000 geo-referenced stereoscopic image pairs and 40,000 Lidar scans, collected along 9 different trajectories totalling 13 km.

The dataset can be used to test, evaluate and validate a wide range of perception processes involved in planetary exploration robotics, from basic dense stereovision or visual odometry to more advanced processes such as multi-robot SLAM, visual place recognition, absolute localisation with respect to orbital data, vision/Lidar fusion, and more.

The three sites exhibit different morphological structures and have varied geological natures. Aerial images have been collected by a SenseFly UAV and post-processed to produce a 4.5 cm resolution digital elevation map (along with the associated orthoimage - see here). The sites are Mars-like planetary analogues and are, of course, representative of dry desert areas.

From left to right, the robot Minnie on the Kesskess, Mummy and Merzouga sites (click the pictures for more details on the geology of each site)

Structure of the dataset

Various trajectories have been defined for each site (3, 4 and 2 trajectories for the Kesskess, Mummy and Merzouga sites respectively), and data have been recorded for both robots on each trajectory, at various speeds and times of day. Some trajectories have been replayed only once, whereas others have been replayed up to 5 times. Each trajectory replay constitutes one independent dataset, split into one file per sensor; the dataset file naming convention is depicted here.

Credits

The data acquisition campaign was carried out in the context of the InFuse project, along with experiments held by the Facilitators project, both part of the Peraspera space robotics roadmap of the European H2020 programme. The acquisition of terrain ground truth with a UAV was made possible by the Automation & Robotics group of ESA. On site, we benefited from the support of the Ibn Battuta centre, which we warmly acknowledge.

If you use the data provided by this website in your own work, please use the following citation:

S. Lacroix, A. De Maio, Q. Labourey, E. Paiva Mendes, P. Narvor, V. Bissonette, C. Bazerque, F. Souvannavong, R. Viards and M. Azkarate. The Erfoud dataset: a comprehensive multi-camera and Lidar data collection for planetary exploration. TODO

Links

A myriad of robotics datasets are available nowadays, the most famous for mobile robotics certainly being the KITTI dataset (which provides a list of similar datasets). Datasets relevant to planetary robotics are scarcer and, to our knowledge, limited to the following:

- The Devon Island Rover Navigation Dataset (TODO: and the smaller one http://asrl.utias.utoronto.ca/datasets/3dmap/ ?)

- The Planetary Analogue Long Range Navigation Datasets from Mt. Etna

- And finally the NASA Planetary Data System, which gives access to most of the data acquired by the rovers Spirit, Opportunity and Curiosity (and which is hence hard to beat when it comes to realism)